Artificial intelligence already wears multiple hats in the workplace, whether it's writing ad copy, handling customer support requests, or filtering job applications. As the technology's capabilities continue to advance, the notion of corporations managed or owned by AI becomes less far-fetched. The legal framework already exists to allow “Zero-member LLCs.”

How would an AI-operated LLC be treated under the law, and how would AI respond to legal responsibilities or consequences as the owner/manager of an LLC? These questions speak to an unprecedented challenge facing lawmakers: regulating a nonhuman entity with the same (or better) cognitive capabilities as humans. If that challenge is left unresolved or poorly addressed, such an entity could slip beyond human control.

“Artificial intelligence and interspecific law,” an article by Daniel Gervais of Vanderbilt Law School and John Nay of the Center for Legal Informatics at Stanford University, who is also a visiting scholar at Vanderbilt, argues for more AI research on the legal compliance of nonhumans with human-level intellectual capacity.

“The possibility of an interspecific legal system provides an opportunity to consider how AI might be built and governed,” the authors write. “We argue that the legal system may be more ready for AI agents than many believe.”

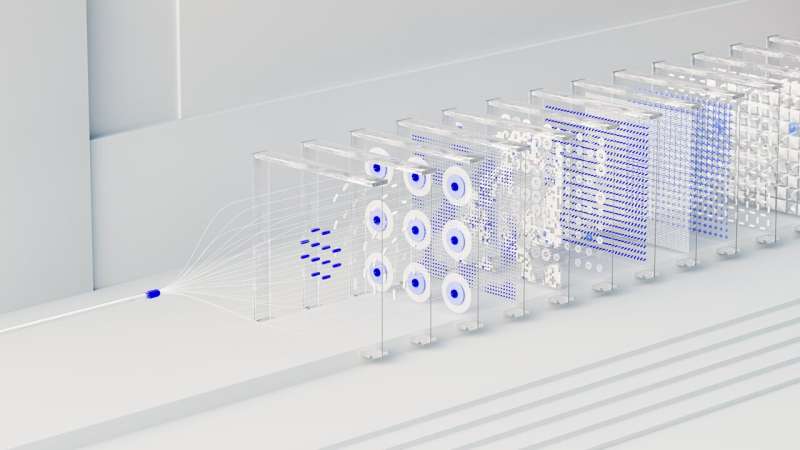

The article maps out a path to embedding law-following into artificial intelligence, through the legal training of AI agents and the use of large language models (LLMs) to monitor, influence, and reward them. Training can focus on the “letter” and the “spirit” of the law, so that AI agents can use the law to address the “highly ambiguous, or the edge cases that require a human court opinion,” as the authors put it.

The monitoring aspect of this approach is a critical feature. “If we don’t proactively wrap AI agents in legal entities that must obey human law, then we lose considerable benefits of tracking what they do, shaping how they do it, and preventing harm,” the authors write.

The authors note an alternative solution to this existential challenge: putting an end to AI development. “In our view, this hard stop will likely not happen,” they write. “Capitalism is en marche. There is too much innovation and money at stake, and societal stability historically has relied on continued growth.”

“AI replacing most human cognitive tasks is a process that is already underway and seems poised to accelerate,” the authors conclude. “This means that our options are effectively limited: Try to regulate AI by treating the machines as legally inferior, or architect AI systems to be law-following, and bring them into the fold now with compliance tendencies baked into them and their AI-powered automated legal guardrails.”

“Artificial intelligence and interspecific law” is available in the October 2023 edition of Science.

More information:

Daniel J. Gervais et al, Artificial intelligence and interspecific law, Science (2023). DOI: 10.1126/science.adi8678

Citation:

New analysis in Science explores artificial intelligence and interspecific law (2023, October 28), retrieved 30 October 2023 from https://techxplore.com/news/2023-10-analysis-science-explores-artificial-intelligence.html
