Russ Tedrake likes to present an eye-opening look at what’s possible now with robotics, and what is likely to be possible in the near future, as AI robots vault into our lives.

“This is a great time to be a roboticist,” he says, leading off with some words about how robots are making progress on some of the hardest jobs they can tackle.

Two big challenges, Tedrake noted in his lecture, are manual dexterity and social intelligence: robots still have a hard time with granular physical tasks, and with the kinds of intuitive interactions that form human communications in general.

“If you think about … things that we do every day, and we take for granted, (many of them are) incredibly hard,” Tedrake said. “But we’re making lots of progress.”

Robots are learning fast!

In terms of manual dexterity, he said, engineers are using visuomotor policies and working with pre-trained perceptual networks to develop what’s called a ‘learned state representation’ in order to plan actions.

Robust feedback, he said, is important for these models.

He also mentioned a shift in thinking, from traditional reinforcement learning methods to something called ‘behavioral cloning’: a supervised approach in which the model takes in demonstrations, meaning pairs of observations and the actions an expert took, and learns a policy that reproduces those actions.

“We’ve been playing with behavior cloning, with visuomotor policies, for tasks, again, that would have been considered out of scope,” he said.
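To make the idea of behavior cloning concrete, here is a minimal sketch under stated assumptions. The "expert" is a hypothetical one-dimensional controller that pushes a state back toward zero, the demonstrations are synthetic, and the learned policy is a simple line fitted by least squares. None of this comes from Tedrake's lecture; real visuomotor policies map camera images to robot actions with deep networks, but the supervised recipe is the same: collect (observation, action) pairs, then fit a policy to them.

```python
import random


def expert_action(x, gain=2.0):
    """Hypothetical demonstrator: push the state x back toward zero."""
    return -gain * x


def collect_demonstrations(rng, n=200, noise=0.05):
    """Record (observation, action) pairs from the slightly noisy expert."""
    demos = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        a = expert_action(x) + rng.gauss(0.0, noise)
        demos.append((x, a))
    return demos


def fit_linear_policy(demos):
    """Behavior cloning as supervised learning: least-squares fit of a = w*x + b."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((x - mean_x) * (a - mean_a) for x, a in demos)
    var = sum((x - mean_x) ** 2 for x, _ in demos)
    w = cov / var
    b = mean_a - w * mean_x
    return w, b


rng = random.Random(0)
w, b = fit_linear_policy(collect_demonstrations(rng))
print(f"cloned policy: action = {w:.3f} * x + {b:.3f}")
```

The cloned policy never sees a reward signal; it only imitates what the demonstrator did, which is the contrast with reinforcement learning drawn above.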

As for architecture, Tedrake mentioned diffusion policy, where the program learns a distribution over possible actions.
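A rough sketch of what "learning a distribution over actions" buys you: instead of returning one average action, the policy can sample from several valid options. The example below draws actions with Langevin dynamics, a standard way to sample from a distribution given its score function. Everything in it is an assumption made for illustration: the two-mode action distribution, the names `mixture_score` and `sample_action`, and the step sizes. The score is written by hand here; in a diffusion policy it would be a neural network trained on demonstrations and conditioned on what the robot observes.

```python
import math
import random


def mixture_score(a, modes=(-1.0, 1.0), sigma=0.2):
    """Score d/da log p(a) of an equal-weight Gaussian mixture over actions.

    Stand-in for a learned score network (illustrative assumption).
    """
    logits = [-((a - m) ** 2) / (2 * sigma**2) for m in modes]
    top = max(logits)  # shift for numerical stability
    weights = [math.exp(l - top) for l in logits]
    total = sum(weights)
    return sum(w / total * (m - a) / sigma**2 for w, m in zip(weights, modes))


def sample_action(rng, steps=200, step_size=1e-3):
    """Langevin dynamics: start from noise, follow the score plus fresh noise."""
    a = rng.gauss(0.0, 1.0)
    for _ in range(steps):
        noise = rng.gauss(0.0, 1.0)
        a = a + step_size * mixture_score(a) + math.sqrt(2 * step_size) * noise
    return a


rng = random.Random(0)
samples = [sample_action(rng) for _ in range(200)]
left = sum(1 for a in samples if a < 0)
print(f"{left} samples near -1, {len(samples) - left} samples near +1")
```

A policy trained to predict a single mean action would answer 0 here, which is neither of the two valid choices. Sampling keeps both options intact, for example going left or right around an obstacle.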

(slide text: Visuomotor policies. Diffusion Policy: diffusion models (generative AI) that learn a distribution (score function) over actions. Examples: robots making pizza and other food.)

He used the example of an AI robot making a pizza: rolling dough, for example, in a way that previous generations of experts would have thought unlikely, and sprinkling cheese, and spreading sauce.

“We can make pizzas with robots now, with a dexterous robot, not just a factory, but actually a dexterous robot doing all the steps,” he said, also showing the robot working precisely with a bowl of noodles.

“The big question is, how do we feed the data flywheel?” he asked. “What we really want is to say: I’ve trained a bunch of skills … I’ve trained (n) skills … Now, I want to have a new skill that I’ve never seen before, how quickly can I adapt? How do we feed that pipeline? And what are the scaling laws?”

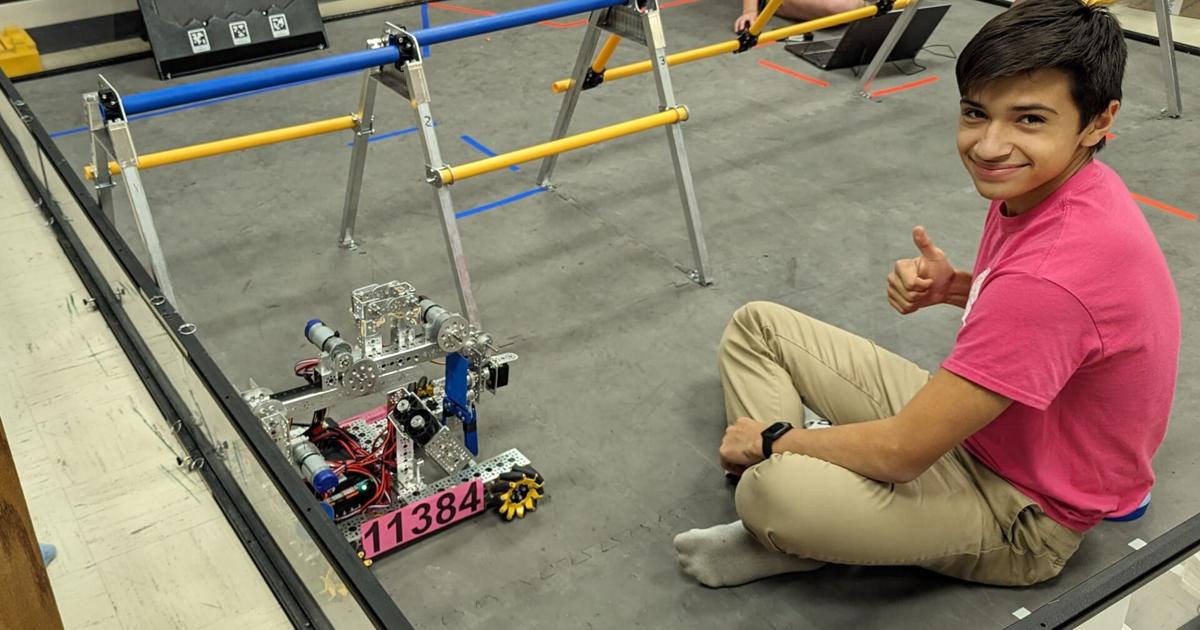

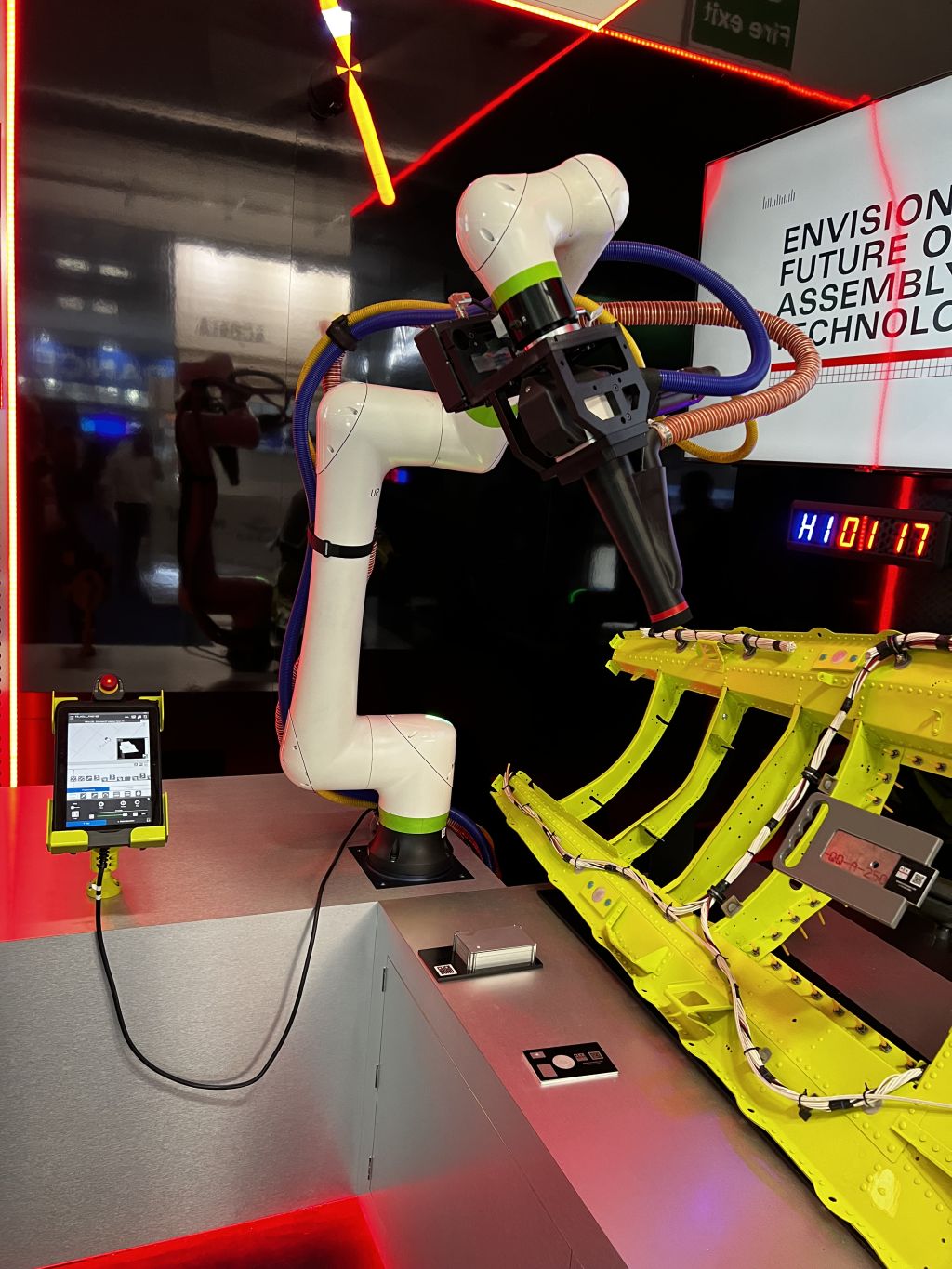

(image caption: New approaches, Tedrake believes, can yield important efficiencies)

He talked about advanced contact simulation and advanced motion planning as elements of a new approach that is likely to drive a big jump in robot capabilities. Using graphs of convex sets as an example of a planning method, Tedrake went over game-quality rendering and other applications that will be interesting as engineers continue to push the envelope in AI and robotics design, for example by using one kind of AI to inform another.

“It’s been generally agreed upon that computer game quality rendering is good enough to train a computer vision system,” he said. “But it has not yet been accepted that computer game quality physics can train the manipulation system… but we’re building really advanced contact simulations. And seeing that ‘sim to real’ transfer. We’ve also been continuing to invest in advanced motion planning and thinking about how rigorous control can be connected with … these generative AI models.”

All of that, he showed, leads to real robotics boosts.

“We now have extremely good planning solutions,” he said. “We have planners that can … solve big complicated robots, doing time-optimal, distance-optimal paths, in complicated collision-free environments, through contact – all solved with convex optimization… we can now, in simulation, feed the pipeline, generate lots and lots of great ground truth demonstrations, and train a generative model.”
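As a toy illustration of why convexity helps with planning, consider the sketch below. It is not the graphs-of-convex-sets planner itself, and the scene, the names, and the solver are all assumptions made for this example. Two convex rooms are joined by a door in a wall; because each room is convex, any straight segment inside it is collision-free, so the shortest path is start, then a point in the door, then goal. The path length is a convex function of where the path crosses the door, so a simple ternary search is guaranteed to find the global optimum.

```python
import math

START = (0.0, 0.0)        # in the left room
GOAL = (2.0, 1.0)         # in the right room
WALL_X = 1.0              # wall between the rooms
DOOR = (0.7, 0.9)         # door opening along the wall (y range)


def path_length(door_y):
    """Length of start -> (WALL_X, door_y) -> goal; convex in door_y."""
    crossing = (WALL_X, door_y)
    return math.dist(START, crossing) + math.dist(crossing, GOAL)


def minimize_convex_1d(f, lo, hi, iters=100):
    """Ternary search: finds the global minimum of a convex f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if f(m1) <= f(m2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2


door_y = minimize_convex_1d(path_length, *DOOR)
length = path_length(door_y)
print(f"cross the door at y = {door_y:.3f}, path length = {length:.3f}")
```

The straight line from start to goal would cross the wall at y = 0.5, which is blocked, so the optimal path hugs the lower edge of the door. Planners of the kind Tedrake describes handle many regions and much richer robot models, but the appeal is the same: convex problems can be solved to global optimality, so the resulting plans can serve as reliable demonstrations.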

Exploring some of the ways that people are taking advantage of these nascent models, Tedrake showed, for example, how a robot can consistently enforce a desired state, with specialized challenges forming the context for its work, so that the robot’s work starts to look, in some ways, more human.

“So maybe this is one of the bets: maybe I can generate enough beautiful plans in simulated environments, to generate the visuomotor maps to replace the human demonstrations and train my generative models.”

Dexterous manipulation skills, he said, are still generally unsolved, and there’s a lot more work to do, but today’s robots, he suggested, are learning fast.

All of the code, Tedrake noted, is open source, so interested people can go online and see what others are doing to advance this kind of work, which has the potential to make robots far more capable.

“The progress is incredibly fast,” he said. “I told you about visuomotor diffusion policies, which started with imitation learning from humans. And we’re feeding the pipeline with advanced simulation and planning and control.”

Tedrake is an MIT professor and Vice President of Simulation and Control at the Toyota Research Institute.